Chain-of-Thought vs Direct Output

Which Approach Enhances LLM Interpretability?

Research Hypothesis

Chain-of-Thought prompting is hypothesized to improve interpretability in LLMs compared to Direct Output generation, especially for complex, multi-step problem-solving tasks.

Key Focus Areas

- Transparency enhancement

- Trust building mechanisms

- Explainability improvements

Defining Interpretability in the Context of LLMs

Key Aspects of Interpretability: Transparency, Trust, and Explainability

Interpretability in Large Language Models (LLMs) is a multifaceted concept crucial for their responsible deployment, encompassing transparency, trust, and explainability. Transparency refers to the extent to which a user can understand the internal mechanisms and decision-making processes of an LLM. However, the inherent complexity and "black box" nature of modern LLMs, often with billions of parameters, make achieving full transparency a significant challenge [93] [96].

Trust is built when users can rely on the model's outputs and believe in its reasoning, which is heavily influenced by how well they can interpret and verify its decisions. Explainability, a key component of interpretability, focuses on providing human-understandable reasons for the model's outputs, often through post-hoc analysis or by designing models that inherently produce explanations [93] [96].

The Role of Natural Language Explanations

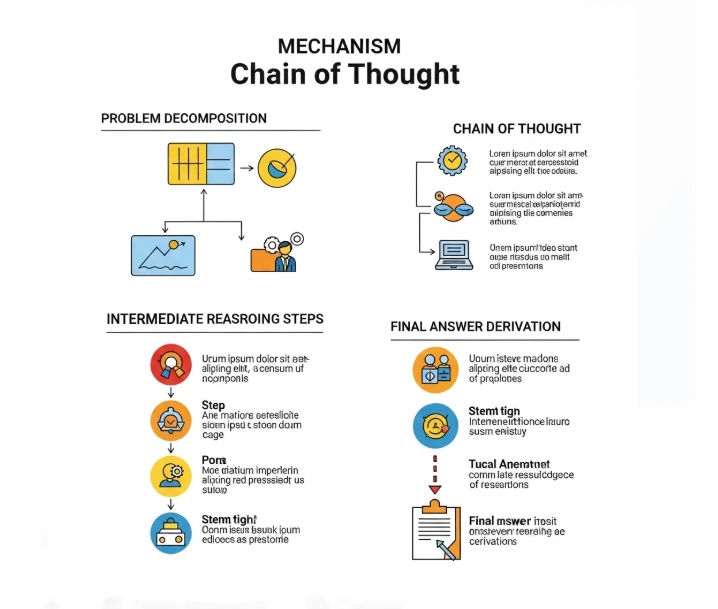

Natural language explanations (NLEs), including rationales and Chain-of-Thought (CoT) prompting, play a pivotal role in enhancing the interpretability of Large Language Models (LLMs) by providing human-readable justifications for their outputs [94] [96]. CoT prompting, a specific technique for eliciting NLEs, instructs the LLM to generate intermediate reasoning steps before arriving at a final answer, effectively "showing its work."

Advanced CoT Techniques

- Guided CoT Templates: Provide structured, predefined frameworks of logical steps to steer model reasoning [26]

- ReAct (Reasoning and Acting): Integrates task-specific actions with step-by-step reasoning [26]

- Explicit CoT: Decomposes the task into separate reasoning and response-generation stages for clearer, more systematic reasoning [27]

Experimental Design: Comparing CoT and Direct Output

Task Selection: Complex, Multi-Step Problem Solving

The selection of appropriate tasks is crucial for investigating the comparative interpretability of Chain-of-Thought (CoT) prompting versus Direct Output generation. The core objective is to evaluate these approaches in scenarios that demand complex, multi-step problem-solving, as these are the contexts where the benefits of CoT are hypothesized to be most pronounced.

Participant Group: Students

The participant group for this study will consist of students. This choice is motivated by several factors relevant to the research question. Students represent a key demographic that increasingly interacts with AI-powered tools for learning and problem-solving.

Rationale for Student Participants

- Educational Context

- Cognitive Alignment

- Future Users

Prompting Strategies

The experiment will compare two primary prompting strategies for LLMs: Chain-of-Thought (CoT) prompting and Direct Output generation. The goal is to assess their relative impact on the interpretability of the model's responses to complex, multi-step problems.

| Feature | Chain-of-Thought (CoT) Prompting | Direct Output Generation |
|---|---|---|
| Objective | Elicit explicit, step-by-step reasoning before the final answer [26] | Obtain only the final answer without intermediate steps [62] |
| Mechanism | Instructs LLM to "think step by step," show calculations [62] [88] | Presents the problem and asks for the solution directly [62] |
| Output Structure | Intermediate reasoning steps in natural language + final answer [27] | Only the final answer is provided |
| Expected Benefit | Enhanced transparency, trust, and explainability [49] [63] | Brevity and speed; potentially preferred for simple tasks |

CoT Example Prompt

"You are a helpful assistant. Solve the problem step by step, showing all your calculations. Finally, provide the answer."

Direct Output Example

"You are a helpful assistant. Solve the problem and provide the final answer."

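The two conditions could be set up in code as in the minimal sketch below. It assumes a chat-style message list of role/content dictionaries, a format used by many LLM APIs. The helper `build_messages` and its constants are hypothetical; only the two system instructions come from the example prompts above. Because the user message stays fixed, the system instruction is the only thing that varies between conditions.

```python
# Hypothetical helper for the two experimental conditions. The instruction
# strings are the example prompts; the role/content message format is an
# assumption modeled on common chat APIs, not a specific vendor's interface.
COT_PROMPT = (
    "You are a helpful assistant. Solve the problem step by step, "
    "showing all your calculations. Finally, provide the answer."
)
DIRECT_PROMPT = (
    "You are a helpful assistant. Solve the problem and provide the final answer."
)


def build_messages(problem, condition):
    """Return the message list for one problem under 'cot' or 'direct'."""
    prompts = {"cot": COT_PROMPT, "direct": DIRECT_PROMPT}
    if condition not in prompts:
        raise ValueError(f"unknown condition: {condition!r}")
    # Only the system instruction differs; the problem text is identical,
    # so differences in interpretability can be attributed to the prompt.
    return [
        {"role": "system", "content": prompts[condition]},
        {"role": "user", "content": problem},
    ]
```

For example, `build_messages("A train travels 120 km in 1.5 hours. What is its average speed?", "cot")` pairs that problem with the step-by-step instruction.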
Metrics for Evaluating Interpretability

The evaluation of interpretability in LLMs will employ both objective and subjective metrics to provide a comprehensive understanding of the impact of CoT versus Direct Output. This multi-faceted approach allows for the assessment of not only the semantic quality of the explanations but also the user's perception and experience.

| Metric Category | Metric Name | Description | How it Measures Interpretability |
|---|---|---|---|
| Objective | Answer Semantic Similarity (ASS) | Measures semantic alignment using embeddings (cosine similarity) [93] | Higher similarity indicates better alignment with expected reasoning [93] |
| Objective | LLM-based Accuracy (Acc) | Uses evaluator LLM to judge factual correctness [93] | Assesses factual correctness against known standard [93] |
| Objective | LLM-based Completeness (Cm) | Uses evaluator LLM to judge coverage of key points [93] | Assesses thoroughness against known standard [93] |
| Subjective | Student Perceptions | Likert-scale surveys on clarity, trustworthiness, completeness [22] [36] | Captures user experience and judgment [15] [79] |

Objective Metric: Answer Semantic Similarity (ASS)

Answer Semantic Similarity (ASS) is an objective metric used to evaluate the interpretability of Large Language Models by measuring how closely the meaning of a model-generated response aligns with a ground-truth answer or explanation [93]. This metric typically involves generating vector representations (embeddings) for both the LLM's output and the reference text using a pre-trained language model encoder.
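The core ASS computation can be sketched as follows. The sketch assumes the embeddings have already been produced by some pre-trained encoder, which is not shown and not specified here. The vectors are plain Python lists so the example stays self-contained, and the function name `cosine_similarity` is illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    # ASS for one item: similarity of the response embedding to the
    # reference (ground-truth) embedding.
    return dot / (norm_a * norm_b)
```

For example, `cosine_similarity([1.0, 0.0], [1.0, 1.0])` equals 1/√2, about 0.7071. Per-item scores could then be averaged within each condition.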

LLM-based Evaluation Metrics

- Accuracy (Acc): Factual correctness assessment

- Completeness (Cm): Key points coverage

- Validation (LAV): Binary correctness judgment
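For the binary LAV judgment, one possible pipeline asks an evaluator LLM for a fixed-format verdict and then parses the replies. The prompt wording, the `VERDICT:` convention, and the functions `parse_verdict` and `lav_rate` are all assumptions for illustration. The call to the evaluator model itself is omitted.

```python
import re
from typing import List, Optional

# Hypothetical evaluator instruction; wording and output format are assumed.
LAV_TEMPLATE = (
    "You are grading an answer.\n"
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "Candidate answer: {candidate}\n"
    "Reply with exactly one line: VERDICT: CORRECT or VERDICT: INCORRECT."
)

_VERDICT = re.compile(r"VERDICT:\s*(CORRECT|INCORRECT)\b", re.IGNORECASE)


def parse_verdict(reply: str) -> Optional[bool]:
    """Map an evaluator reply to True/False, or None if it is unparseable."""
    match = _VERDICT.search(reply)
    if match is None:
        return None
    return match.group(1).upper() == "CORRECT"


def lav_rate(replies: List[str]) -> float:
    """Share of parseable verdicts that are CORRECT."""
    verdicts = [v for v in map(parse_verdict, replies) if v is not None]
    if not verdicts:
        raise ValueError("no parseable verdicts")
    return sum(verdicts) / len(verdicts)
```

Unparseable replies are excluded rather than counted as incorrect, so the number of discarded replies is worth reporting alongside the rate.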

Subjective Metric: Student Perceptions via Likert-Scale Surveys

The evaluation of interpretability extends beyond objective metrics and necessitates an understanding of the user's subjective experience. Student perceptions will be gathered using Likert-scale surveys, allowing for the quantification of subjective qualities such as clarity, trustworthiness, and completeness of the AI-generated explanations.

Clarity

Ease of understanding, simplicity of language, logical flow, and absence of ambiguity [36]

Trustworthiness

User confidence in AI's explanation, influenced by logical soundness and consistency [46] [49]

Completeness

Coverage of all necessary steps and information without omitting critical reasoning parts [46]
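Once responses are collected, ratings could be summarized per construct and condition. This sketch assumes a 5-point scale and a record layout with one dict per student and condition; both are hypothetical. It reports medians, which suit ordinal Likert data better than means.

```python
from statistics import median

# Survey constructs; ratings are assumed to lie on a 1-5 Likert scale.
CONSTRUCTS = ("clarity", "trustworthiness", "completeness")


def summarize(responses):
    """Median rating per construct, per condition (e.g. 'cot' / 'direct')."""
    summary = {}
    for cond in sorted({r["condition"] for r in responses}):
        rows = [r for r in responses if r["condition"] == cond]
        summary[cond] = {}
        for construct in CONSTRUCTS:
            ratings = [r[construct] for r in rows]
            # Reject values outside the assumed scale before summarizing.
            if any(not 1 <= x <= 5 for x in ratings):
                raise ValueError(f"{construct} rating outside 1-5 for {cond}")
            summary[cond][construct] = median(ratings)
    return summary
```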

Statistical Analysis of Interpretability Scores

The statistical analysis of interpretability scores will be crucial for drawing meaningful conclusions from the experimental data. The primary goal is to determine if there are statistically significant differences in interpretability between Chain-of-Thought (CoT) prompting and Direct Output generation, as perceived by student participants and measured by objective metrics.

Within-Subjects Design

Each student evaluates both CoT and Direct Output for different problems in counterbalanced order.

Recommended Test: Wilcoxon signed-rank test
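One possible counterbalancing scheme for the within-subjects design is sketched below. It assumes two condition orders and a problem set split into two halves. The function `counterbalance` and its default seed are illustrative. Cycling students through four schedules crosses condition order with problem half. As a result, every problem appears under both conditions and neither condition is always seen first.

```python
import random


def counterbalance(student_ids, problems, seed=0):
    """Map each student to an ordered pair of (condition, problems) blocks."""
    if len(problems) % 2:
        raise ValueError("need an even number of problems to split in half")
    half = len(problems) // 2
    set_a, set_b = tuple(problems[:half]), tuple(problems[half:])
    # Four schedules crossing condition order (CoT first / Direct first)
    # with which half of the problems is solved under CoT.
    schedules = [
        (("cot", set_a), ("direct", set_b)),
        (("direct", set_a), ("cot", set_b)),
        (("cot", set_b), ("direct", set_a)),
        (("direct", set_b), ("cot", set_a)),
    ]
    ids = list(student_ids)
    random.Random(seed).shuffle(ids)  # random but reproducible assignment
    return {sid: schedules[i % 4] for i, sid in enumerate(ids)}
```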

Between-Subjects Design

One group evaluates CoT outputs, another group evaluates Direct Output outputs.

Recommended Test: Mann-Whitney U test

Why Non-Parametric Tests?

Likert-scale ratings are ordinal, which already favors rank-based tests for the survey data. Even for continuous scores such as cosine similarity, non-parametric tests may be preferred. Cosine similarity is bounded between -1 and 1, but its distribution need not be normal, and normality is difficult to verify with small samples.

Because non-parametric tests do not assume normally distributed data, they remain valid when that assumption fails. The cost is some statistical power when the data are in fact normal.

Expected Outcomes and Implications

Hypothesized Advantages of CoT for Interpretability

It is hypothesized that Chain-of-Thought (CoT) prompting will significantly improve the interpretability of LLMs compared to Direct Output generation, particularly for complex, multi-step problem-solving tasks. The explicit articulation of intermediate reasoning steps in CoT is expected to lead to higher perceived clarity, trustworthiness, and completeness.

Expected CoT Benefits

- Higher perceived clarity through logical progression

- Greater trustworthiness via inspectable reasoning

- Enhanced completeness of problem-solving process

- Improved Answer Semantic Similarity scores

- Better understandability and helpfulness for learning

Direct Output Contexts

- Brevity and speed advantages

- Preferred for straightforward tasks

- Time-sensitive situations

- When user confidence is high

- Avoidance of cognitive overload

Implications for LLM Design and User Interaction

The findings from this research will have significant implications for the design of LLMs and user interaction paradigms. If CoT is consistently shown to improve interpretability, it could lead to its wider adoption as a standard prompting technique or even an inherent feature in LLMs designed for tasks requiring transparency and explainability.

Educational AI Tools

Showing "working out" can be crucial for student learning and understanding of complex concepts.

Personalized Assistants

AI systems that adapt explanation style based on user preferences, expertise, or query complexity.

Evaluation Metrics

A clearer picture of which aspects of CoT contribute most to interpretability, which can inform better evaluation metrics.
